somehow with this stuff, which I was perhaps near to doing on a few other pages, and don't think is really necessary, but I think this page will be compact and relevant enough, and the subject is broadly applicable to programming in general. As always, comment and add (in a separate enough way) at will, as is the rule in these wikis.

The von Neumann architecture is the mathematical model which underlies almost all modern computers. This architecture of logical components is essentially a constrained finite state machine (FSM) which can be mathematically modeled as a Turing machine. Such a machine has inputs, outputs, and memory. Any output sequence should be predictable from the input sequence and the initial memory state. This verifiability is key to putting the machine to productive work. The underlying assumption is that the range of possible input sequences and memory states can be defined, and that the machine provably produces exactly one well-defined output for each of these.

In practice this can be narrowed down to a model of machine behavior where only human-understandable input sequences and memory states are defined, along with their hopefully-logical outcome. For example, addition may be defined without having to define the astronomically large number of far-fetched logical function mappings no one would ever be interested in. Even the most wildly creative human brains are interested in only a small subset of the possible mappings.

A Turing machine can be modeled by a finite state machine with a simple separation of memory, input, and the logic or function which determines state transitions. Another function produces an output pattern from the input given a certain state.

As logic designers should know, most digital machines can be defined as a finite state machine, either logically or in actual implementation. The existence of a BIST interface in modern Pentium chips is a good example of this: such input/output pins allow a digital tester to enter an almost complete state into the internal memory cells of the chip, apply one or more finite-state progression steps (clock cycles), and serially read out the resulting state.

Apart from such unpredictable events as communication bus conflicts, the whole unrolling of events which happens in a PC or workstation is exactly defined by the combination of all system and user programs and states of the machine. One can simply emulate what a Pentium chip does with the machine code it is executing, and know exactly what goes on in the machine, from now to infinity. To visualize this, consider making a complete and error-free disc image of a machine, putting the disc with that copy in a similar machine, adjusting that machine to have, as far as possible, the same serial numbers and battery-backed memory contents, and giving it the same configuration and peripherals. When the machine is started up, given the same set of inputs from devices such as a mouse, keyboard and network interfaces, it will exhibit exactly the same behavior as the old machine. It is the logical equivalent of the old machine. Start up the second machine before the first, give both the same inputs at the same temporal offset from startup, and the second machine will predict the behavior of the first.
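The transition function, the output function, and the "second machine predicts the first" argument can be sketched in a few lines. This is only an illustration, written in Python for brevity; the counter machine and every name in it (`run_machine`, `count_transition`, `count_output`) are hypothetical and not taken from any real chip or library.

```python
from typing import Callable, Iterable, List, Tuple

State = int
Symbol = int


def run_machine(
    transition: Callable[[State, Symbol], State],
    output: Callable[[State, Symbol], Symbol],
    initial_state: State,
    inputs: Iterable[Symbol],
) -> Tuple[State, List[Symbol]]:
    """Step a Mealy-style machine through `inputs`, one symbol per clock tick."""
    state = initial_state
    outputs: List[Symbol] = []
    for symbol in inputs:
        outputs.append(output(state, symbol))  # output depends on state and input
        state = transition(state, symbol)      # then the state advances
    return state, outputs


# Hypothetical example machine: counts the 1s it sees (modulo 4)
# and reports 1 whenever the count wraps around.
def count_transition(state: State, symbol: Symbol) -> State:
    return (state + symbol) % 4


def count_output(state: State, symbol: Symbol) -> Symbol:
    return 1 if state == 3 and symbol == 1 else 0


inputs = [1, 0, 1, 1, 1, 0, 1]

# "Two machines" started from the same state and fed the same inputs.
first = run_machine(count_transition, count_output, 0, inputs)
second = run_machine(count_transition, count_output, 0, inputs)
assert first == second  # identical final state and identical output sequence
```

Nothing here depends on when the second run happens, which is the point of the disc image thought experiment: the initial state plus the input sequence fixes everything that follows.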
Except for the internet clock service ...

Unless one is an EE with the proper setup, however, providing the same inputs with the same timing, to the precision of a clock cycle (on the order of nanoseconds), is often impractical, particularly where imprecise events such as network or human input are concerned.

When a computer is conceived as a function, with a distinct starting state as its input (disc content and initial memory content, including CMOS and EEPROMs) and, from the time it is switched on, an exactly defined set of temporal inputs down to the very last bit in every little clock cycle, it is easy to imagine that its behavior is entirely predictable. This supposes that one knows what certain components with non-deterministic behavior will do. An extreme example of such a component is an electronic noise generator circuit, which one could characterize but hardly predict exactly. The ability to predict the behavior of a deterministic machine is also precluded by errors such as memory or disc faults, electronic impulses which interfere with the machine, or a nuclear blast that obliterates the machine in milli (pico?) seconds.

Something like an Ethernet network can be non-deterministic in nature: only an electronics expert with accurate and complex probes and knowledge of the circuit can predict exactly what it will do under certain conditions, for example when packets collide on the network cable. This is not necessarily problematic, but it also cannot be projected onto the behavior of the finite state machine or an exactly known Turing machine composition.

A von Neumann architecture is one in which a processor core is connected to a memory device, with instructions and data passing between the core, where computations take place, and the memory, where everything is stored. The connection, whose speed constrains operation within the architecture, is referred to as the von Neumann bottleneck.
In practice, the memory device is a hierarchical series of devices, but the principle is the same.

A parallel architecture is one alternative. I like the example of the Connection Machine, which contains thousands of cells containing lists, and whose basic behavior includes efficient association and communication. Such a machine can also be modeled as a finite state machine, but is not a von Neumann architecture. Except for graphics card instructions and things like MMX, PCs and regular workstations are.

In terms of software design, the important point is that the finite state machine, which consists of the processor core, memory, and interfaces, progresses through its states according to a set of rules that are limited by the number of transitions which can be defined for each state. In a finite state machine representing a computer, the lowest level of instruction is assembly code, which is a grand simplification of groups of possible state transitions for the duration of a small number of clock cycles, in a form which is sensible enough for certain classes of human beings and therefore understandable and manageable.

In the von Neumann architecture, each instruction is stored in memory and, when executed, causes the finite state machine that is the computer to traverse the number of states prescribed by the instruction. In other words, the instruction represents some usually-fixed sequence of actions and their corresponding state transitions. A simple instruction may involve a few actions, and a complex instruction may involve hundreds. Older machines and some special contemporary machines can be microprogrammed, meaning that each state transition for a given machine instruction can be modified.

In the end, all compiler output, operating system routines and programs, and all user programs are executed as machine instructions by an operational machine, with completely well-defined and predictable behavior.

As an example, here is an instruction definition from the 1.7 GHz Intel Pentium D data sheet:

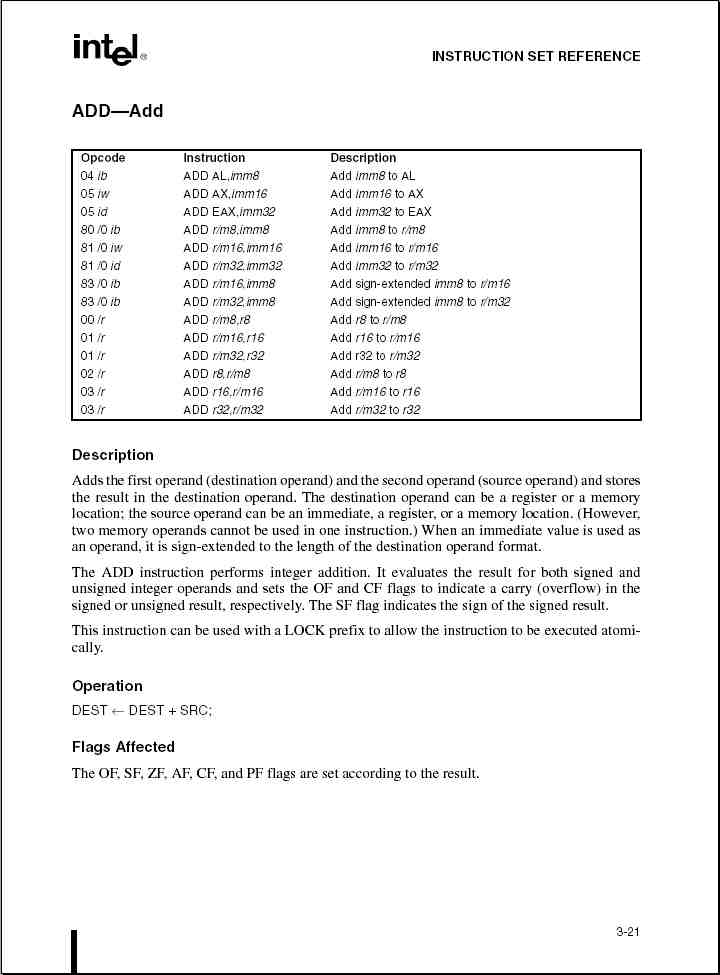

Source: Intel, Pentium D data sheet.

The "add" instruction performs binary addition of the values at the DEST and SOURCE locations, and stores the result at the DEST location. Results are typically stored either in main memory or in registers on the processor. Opcodes are the binary representation of the instruction, stored at the location the processor is reading its instructions from, and can be seen by inspecting the memory.

The relevance for those contemplating programs written in Tcl is clear: everything in the end ends up as instructions in the machine we call a computer. Different makes of computer may have different processors with a different opcode for addition, but they most likely operate on similar operands in comparable variations. Most workstations would also support similar operations, except, for instance, RISC processors, which reduce the possibilities per instruction, and CISC processors, which can perhaps do more with the addition type of instruction.

A compiler takes sub-sequences of program source code and translates them to sequences of machine code, which the link editor then links together, resolving the cross references between various parts of the code.

Higher-level languages often implement a stack data structure, and make use of it to pass data between functional units of code, but this is a mechanism implemented by that particular higher-level language, and is not fundamental to the native instruction set of the CPU. The C stack is one such example.

Not in the picture here, though not strictly outside the FSM model, is the occurrence of interrupts, which introduce input to the state machine at certain points in time. In the context of a program, an interrupt diverts control to a unit of instructions that have been designated to handle the interrupt, changing the normal control flow of the program.

The logical structure of a Tcl list is analogous to the structure of the main memory of a processor: a sequence of locations in which instructions or data may be located. It is not necessarily a physically linear list but, as is the case with memory chips, a sequence of indexed entries.
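To make the stored-program idea concrete, here is a minimal sketch of a toy machine whose instructions and data live in one indexed list. It is written in Python purely for illustration. The opcode numbers, the three-cell instruction layout, and the `run` function are assumptions invented for this example and do not correspond to the encoding of any real processor.

```python
from typing import List

# Hypothetical opcodes for a toy machine; real processors use different encodings.
HALT = 0
ADD = 1   # ADD dest, src : memory[dest] = memory[dest] + memory[src]


def run(memory: List[int], pc: int = 0) -> List[int]:
    """Fetch and execute instructions from `memory` until HALT is reached."""
    while True:
        opcode = memory[pc]                 # fetch the opcode at the program counter
        if opcode == HALT:
            return memory
        if opcode == ADD:
            dest, src = memory[pc + 1], memory[pc + 2]
            memory[dest] = memory[dest] + memory[src]
            pc += 3                         # skip the opcode and its two operands
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc}")


# Instructions and data share one indexed memory, like the entries of a list.
memory = [
    ADD, 8, 9,   # addresses 0-2: memory[8] += memory[9]
    ADD, 8, 8,   # addresses 3-5: memory[8] += memory[8]
    HALT,        # address 6
    0,           # address 7: unused
    2,           # address 8: DEST value
    3,           # address 9: SOURCE value
]
run(memory)
print(memory[8])  # prints 10
```

Inspecting `memory` before and after the run shows both halves of the point made above: the opcodes are just numbers sitting at indexed locations, and the result lands at the DEST location.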

DKF: All static (or strictly, all bounded) arrangements of computing systems can be modelled as FSMs.
They've all got states and transitions between them, and that's about all you need. You can even model parallelism within a FSM (nondeterminism is useful for this sort of thing), though you'll tend to get much more compact representations when you use nets of communicating machines.

Things get a bit more complex when you want to model infinite data or infinite numbers of computing elements... ;^)

TV: As a generally interesting problem, we have the famous puzzle of the dining philosophers which, to be resolved, requires knowledge about the initial event(s). A truly non-deterministic problem is an Ethernet network. There we have collision detection, hold-off logic, and simultaneous event resolution, which all result in a system whose precise behavior at any given moment is impossible to predict, both in practice and in principle. Also, in network and multitasking OS calls, timing may be unpredictable in practice and/or in principle because of inaccurate interrupt clocks or other unpredictable mechanisms, and therefore models which include such cases must account for this non-determinism.

In practice, infinite computing elements are never fully reached with digital computers. They are all Turing-like machines with discrete and therefore bounded memories, and discrete in number, and therefore capable of generating only bounded contention or assignment problems. Solving a differential equation and making a continuous and possibly infinite-dimensional state is another story.

One mathematical issue with the connected machines idea is that it is not a given that combinations of finite state machines are themselves automatically strictly decent FSMs. At least the initial input for a round-coupled system is uncertain.

Graphs of connections of mathematical functions are VERY problematic: two functions with simplistic domains and ranges connected in a simplistic way will leave you with a resulting 'function' that is no longer a function, but in fact has introduced a state and an unknown output even for certain known inputs. (See the example on the Flip Flop page.)
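A small sketch may help here. It is not the example from the Flip Flop page, only an assumed stand-in: two NOR functions wired output-to-input, as in a set/reset latch, written in Python. The names `nor` and `settle`, and the choice to evaluate the loop a few times in a fixed order, are assumptions made for the illustration.

```python
from typing import Tuple


def nor(a: int, b: int) -> int:
    """A pure function: the output depends only on the two inputs."""
    return 0 if (a or b) else 1


def settle(s: int, r: int, q: int, q_bar: int) -> Tuple[int, int]:
    """Iterate two cross-coupled NOR functions until the outputs stop changing.

    q = nor(r, q_bar) and q_bar = nor(s, q); each output feeds the other function.
    """
    for _ in range(4):  # a few passes are enough for this small loop to settle
        new_q = nor(r, q_bar)
        new_q_bar = nor(s, new_q)
        if (new_q, new_q_bar) == (q, q_bar):
            break
        q, q_bar = new_q, new_q_bar
    return q, q_bar


# The same input (s=0, r=0) gives different outputs depending on what
# the loop held before, so the combination is no longer a function of (s, r).
after_set = settle(0, 0, *settle(1, 0, 0, 1))     # previously "set"
after_reset = settle(0, 0, *settle(0, 1, 1, 0))   # previously "reset"
print(after_set, after_reset)  # prints (1, 0) (0, 1)
```

Each `nor` on its own is a perfectly ordinary function, yet the coupled pair needs `q` and `q_bar` as extra arguments before its output is defined, which is the state that the connection has introduced.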